Hi,

I have written many calcs in the Essbase for as many different business cases with different volumes up to 100 gigs . Most challenging issues that I have faced in the Essbase world of calculations w.r.to data integrity or performance somehow directly or indirectly related to block creation issues.

So I would like to bring some of experiences in this regard into this article on this cloudy Hyderabad Saturday evening.

First we will see when the blocks are created:

Data Load-- datablocks are created when data is loaded to sparse member combinations hat did not previously exist. The blocks are created upon input whether loaded using a source file and load rule or loaded from a spreadsheet using lock and send.

Sparse Rollup-- data blocks are created when sparse dimensions are rolled up as specified in the outline using a CALC ALL, CALC DIM or other calculation function.

Datacopy-- datablocks are created when the DATACOPY command is executed in a calc script.

Member Formula-- datablocks may be created under certain circumstances as a result of a member formula. eg: Bud=Act+Act*0.1 This functionality is enabled when the Create Blocks On Equations checkbox is checked in the Calculation Group of the Database Settings dialog or SET CREATEBLOCKONEQ ON/ SET CREATENONMISSINGBLK ON in the calc script.

--- First three cases blocks are created natural with no effort. And the fourth one is troublesome and this whole conversation is all about.

Why we are worried about this?

1) Logic seems good. Code seems good. But it will create severe data integrity issues.

2) Some times it will not be found in the testing also.

3) A change which was implemented in the middle of the year works good till Dec and suddenly it will create issue in Jan as the year got changed. (In a case where period is dense and year is sparse)

4) Complex matter in Essbase to digest and handle even for the Consultants who had deep experience and understanding of Essbase.

5) Performance will become very worse If we apply the known remedies as a safety measure in all the possible cases with out identifying the existence of issue.

What Essbase DBAG says:

Constant Values

You can assign a constant value to a member: Florida = 120;

In this formula, California is a member in a sparse dimension and 120 is a constant value. Analytic Services automatically creates all possible data blocks for California and assigns the value 120 to all data cells. Many thousands of data blocks may be created.

--- This is also clear. No Issues in this point also.

Non-Constant Values

If you assign anything other than a constant to a member in a sparse dimension, and no data block exists for that member, new blocks may not be created unless Analytic Services is enabled to create blocks on equations.

For example, to create blocks for West that didn’t exist prior to running the calculation, you need to enable Create Blocks on Equations for this formula:

Florida = “New York” + 120;

You can enable Create Blocks on Equations at the database level whereby blocks are always created, or you can control block creation within calculation scripts.

Will study this in detailed

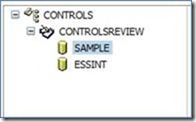

Sample/Basic (Most Popular and Favorite) is the cube for our study.

We all know what’s dense and what’s sparse in this cube.

Dense: Measures, Year and Scenario | Sparse: Market and Product

Have loaded the sample data calcdat.txt and cleared the blocks for Folrida using the below calc.

//ESS_LOCALE English_UnitedStates.Latin1@Binary

Fix(Florida)

ClearBlock All;

Endfix

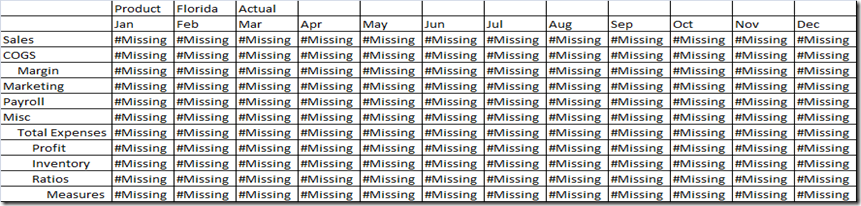

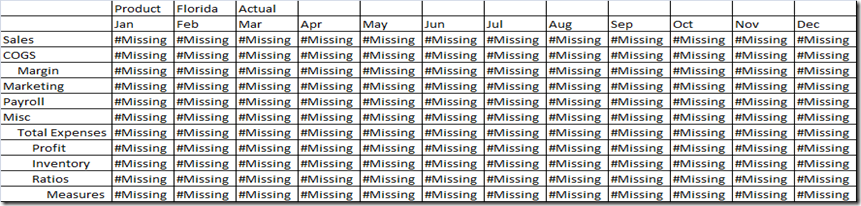

After that below is the snapshot of data where all the blocks for Florida got cleared.

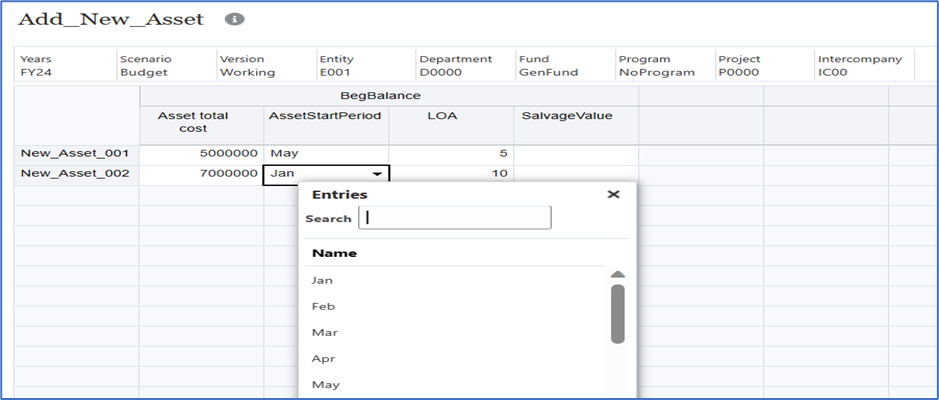

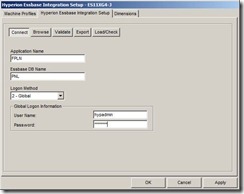

See below that Global Database setting “Create blocks on equations” set to Off. (This is the default).

We will write a calc to calculate the data for Florida from that of New York with out Create blocks on equations turned on in the calc.

//ESS_LOCALE English_UnitedStates.Latin1@Binary

Fix(Actual)

Florida="New York"+120;;

Endfix

This calc should not work as defined in the DBAG as there is no blocks for Florida.

Run this calc and see the results. Surprisingly we can see the results as per the calc logic.

Is this constant value (120) is making magic even in the formula.

Clear again the blocks for Florida.

Will write a calc with formula with two non constant operands and explicitly turn off the create blocks on equations in the calc.

//ESS_LOCALE English_UnitedStates.Latin1@Binary

SET CREATEBLOCKONEQ OFF;

Fix(Actual)

Florida="Utah"+"West";

Endfix

Run this calc and see the results. Calc is done again.

Is the block creation issue a myth? Shall we conclude there is no block creation issue?

No. No. It is there and it should be there. Otherwise we don’t have any work.

Will clear again and rewrite the calc with dense member formulae for the target of Florida where blocks doesn’t exists.

//ESS_LOCALE English_UnitedStates.Latin1@Binary

Fix("Actual","Florida")

"Sales"="Utah"->"Sales" + 120;

"COGS"="Utah"->"COGS" + 120;

Endfix

Sales and COGS for Florida are expected to be calculated from that Utah. Nothing calculated here.

Happy to see that Block creation issues is there. What happened?

By default, Essbase applies dense-member formulas only to existing data blocks. As there exists no blocks for Florida, no calculation happened here.

How this can be resolved? By updating the calc with SET CREATENONMISSINGBLK ON.

//ESS_LOCALE English_UnitedStates.Latin1@Binary

SET CREATENONMISSINGBLK ON;

Fix("Actual","Florida")

"Sales"="Utah"->"Sales" + 120;

"COGS"="Utah"->"COGS" + 120;

Endfix

Issue resolved. Calculation is done.

What the SET CREATENONMISSINGBLK ON will do?

SET CREATENONMISSINGBLK ON enables Essbase to create potential blocks in memory where the dense-member formulas are performed. Of these potential blocks, Essbase writes to the database only blocks that contain values; blocks resulting in only #MISSING are not written to the database.

In order to create new blocks, setting SET CREATENONMISSINGBLK to ON requires Essbase to anticipate the blocks that will be created. Working with potential blocks can affect calculation performance.

The Create Blocks on Equations setting is ignored when this setting turned on.

Is this good or any issues with this?

It will create potential blocks in memory even though it will write and store only the non missing blocks after calculation. This is a very resource intensive algorithm that will hamper the performance of the calc.

Better approach is to rewrite this as a sparse member formula.

//ESS_LOCALE English_UnitedStates.Latin1@Binary

SET CREATEBLOCKONEQ OFF;

SET MSG SUMMARY;

Fix("Actual","Sales", "COGS")

"Florida"="Utah"+ 120;

Endfix

Clear the blocks again and run this calc.

With SET CREATEBLOCKONEQ OFF, this calc should not work. But the results are fine exactly matching with that of dense formula by SET CREATENONMISSINGBLK ON.

As per Essbase DBAG, Essbase will not create a block when a formula assigns a non-constant value to a sparse dimension member for which no block exists with SET CREATEBLOCKONEQ OFF.

Will see the application log

[Sat Aug 04 14:53:59 2012]Local/Sample/Basic/admin@Native Directory/8168/Info(1200481)

Formula for member [Florida] will be executed in [TOPDOWN] mode

[Sat Aug 04 14:53:59 2012]Local/Sample/Basic/admin@Native Directory/8168/Info(1012668)

Calculating [ Market(Florida)] with fixed members [Measures(Sales, COGS); Scenario(Actual)]

[Sat Aug 04 14:53:59 2012]Local/Sample/Basic/admin@Native Directory/8168/Info(1012672)

Calculator Information Message:

Maximum Number of Lock Blocks: [100] Blocks

Completion Notice Messages: [Disabled]

Calculations On Updated Blocks Only: [Enabled]

Clear Update Status After Full Calculations: [Enabled]

Calculator Cache: [Disabled]

[Sat Aug 04 14:53:59 2012]Local/Sample/Basic/admin@Native Directory/8168/Info(1012677)

Calculating in serial

[Sat Aug 04 14:53:59 2012]Local/Sample/Basic/admin@Native Directory/8168/Info(1012672)

Calculator Information Message:

Total Block Created: [0.0000e+000] Blocks

Sparse Calculations: [1.8000e+001] Writes and [7.2000e+001] Reads

Dense Calculations: [0.0000e+000] Writes and [0.0000e+000] Reads

Sparse Calculations: [0.0000e+000] Cells

Dense Calculations: [0.0000e+000] Cells

[Sat Aug 04 14:53:59 2012]Local/Sample/Basic/admin@Native Directory/8168/Info(1012579)

Total Calc Elapsed Time for [CALF0.csc] : [0.144] seconds

[Sat Aug 04 14:53:59 2012]Local/Sample/Basic/admin@Native Directory/8168/Info(1013274)

Calculation executed

See from the log, Formula calculation is happening in Top-Down for Florida.

Essbase uses one of two calculation methods to do a full calculation of a database outline: bottom-up calculation or top-down calculation. By default, Essbase does a bottom-up calculation.

If the database outline contains a complex member formula, Essbase performs a top-down calculation for the relevant member.

Will Force the Formula bottom up and see the results again.

//ESS_LOCALE English_UnitedStates.Latin1@Binary

SET CREATEBLOCKONEQ OFF;

SET FRMLBOTTOMUP ON;

SET MSG SUMMARY;

Fix("Actual","Sales", "COGS")

"Florida"="Utah"+ 120;

Endfix

Clear again the blocks for Florida and run this calc. See nothing is done. No blocks created even for the sparse member formula.

[Sat Aug 04 15:01:24 2012]Local/Sample/Basic/admin@Native Directory/7628/Info(1012668)

[Sat Aug 04 15:01:24 2012]Local/Sample/Basic/admin@Native Directory/7628/Info(1012668)

Calculating [ Market(Florida)] with fixed members [Measures(Sales, COGS); Scenario(Actual)]

[Sat Aug 04 15:01:24 2012]Local/Sample/Basic/admin@Native Directory/7628/Info(1012672)

Calculator Information Message:

Maximum Number of Lock Blocks: [100] Blocks

Completion Notice Messages: [Disabled]

Calculations On Updated Blocks Only: [Enabled]

Clear Update Status After Full Calculations: [Enabled]

Calculator Cache: [Disabled]

[Sat Aug 04 15:01:24 2012]Local/Sample/Basic/admin@Native Directory/7628/Info(1012677)

Calculating in serial

[Sat Aug 04 15:01:24 2012]Local/Sample/Basic/admin@Native Directory/7628/Info(1012672)

Calculator Information Message:

Total Block Created: [0.0000e+000] Blocks

Sparse Calculations: [0.0000e+000] Writes and [0.0000e+000] Reads

Dense Calculations: [0.0000e+000] Writes and [0.0000e+000] Reads

Sparse Calculations: [0.0000e+000] Cells

Dense Calculations: [0.0000e+000] Cells

[Sat Aug 04 15:01:24 2012]Local/Sample/Basic/admin@Native Directory/7628/Info(1012579)

Total Calc Elapsed Time for [CALF0.csc] : [0] seconds

[Sat Aug 04 15:01:24 2012]Local/Sample/Basic/admin@Native Directory/7628/Info(1013274)

Calculation executed

See the log. No Top-Down Calculation and no read and no write.

Turn on the Create Blocks on equation and see the results.

//ESS_LOCALE English_UnitedStates.Latin1@Binary

SET CREATEBLOCKONEQ ON;

SET FRMLBOTTOMUP ON;

SET MSG SUMMARY;

Fix("Actual","Sales", "COGS")

"Florida"="Utah"+ 120;

Endfix

[Sat Aug 04 15:09:16 2012]Local/Sample/Basic/admin@Native Directory/6768/Info(1012668)

[Sat Aug 04 15:09:16 2012]Local/Sample/Basic/admin@Native Directory/6768/Info(1012668)

Calculating [ Market(Florida)] with fixed members [Measures(Sales, COGS); Scenario(Actual)]

[Sat Aug 04 15:09:16 2012]Local/Sample/Basic/admin@Native Directory/6768/Info(1012672)

Calculator Information Message:

Maximum Number of Lock Blocks: [100] Blocks

Completion Notice Messages: [Disabled]

Calculations On Updated Blocks Only: [Enabled]

Clear Update Status After Full Calculations: [Enabled]

Calculator Cache: [Disabled]

[Sat Aug 04 15:09:16 2012]Local/Sample/Basic/admin@Native Directory/6768/Info(1012672)

Calculator Information Message:

Create Blocks on Equations: [Enabled]

[Sat Aug 04 15:09:16 2012]Local/Sample/Basic/admin@Native Directory/6768/Info(1012677)

Calculating in serial

[Sat Aug 04 15:09:16 2012]Local/Sample/Basic/admin@Native Directory/6768/Info(1012672)

Calculator Information Message:

Total Block Created: [3.6000e+001] Blocks

Sparse Calculations: [1.8000e+001] Writes and [3.6000e+001] Reads

Dense Calculations: [0.0000e+000] Writes and [0.0000e+000] Reads

Sparse Calculations: [0.0000e+000] Cells

Dense Calculations: [0.0000e+000] Cells

[Sat Aug 04 15:09:16 2012]Local/Sample/Basic/admin@Native Directory/6768/Info(1012579)

Total Calc Elapsed Time for [CALF0.csc] : [0.123] seconds

[Sat Aug 04 15:09:16 2012]Local/Sample/Basic/admin@Native Directory/6768/Info(1013274)

Calculation executed

Log shows - Create Blocks on Equations: [Enabled]. This helped in getting the calc done.

What SET CREATEBLOCKONEQ ON will do? What Technical Reference says?

If calculations result in a value for a sparse dimension member for which no block exists, Essbase creates a block. Sometimes, new blocks are not desired; for example, when they contain no other values. The Create Blocks on Equation setting is designed for situations when blocks would be created as a result of assigning something other than a constant to a member of a sparse dimension.

What actually it is? Which is better CREATEBLOCKONEQ/ CREATENONMISSINGBLK ?

This will create blocks if and only if the RHS expression can result in a value. Will create blocks automatically if the Sparse Formula calculation is default Top-Down with out Create Blocks on Equations: [Enabled].

Create Blocks on Equations should be enabled for the specific piece of calc having sparse member formula if the calc is globally forcing the formula bottom up or Essbase cfg setting CALCOPTFRMLBOTTOMUP set to TRUE to optimize the sparse calculations.

CREATEBLOCKONEQ/ CREATENONMISSINGBLK – Both these settings have the performance impact because of the processing overhead. But CREATEBLOCKONEQ is better compared to CREATENONMISSINGBLK as the earlier creates only the required blocks but the later creates potential blocks while calculating but store only the non missing blocks. CREATENONMISSINGBLK will have much impact on the calc performance.

Hope covered as much as I can. Feeling tired. Logging out here. Thanks.

![clip_image002[4] clip_image002[4]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEjYJWe-1d5MYXa1luhwkOd6-knG0nl7eKdcqesdOUZIL2rqjGzh9dwJ3b7z78tCvLku3zGcUkF2wkCdMfWpJ84OchlKQH6LisRZclCP2vC-mhnj572m7a-7k9AZRZNvgx15C3nD_AXllFg//?imgmax=800)

![clip_image004[4] clip_image004[4]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEjyCLnFdPkob10vuGOcjqR_eTtNlcYDm-sHHv7-mTncDmY4BY6r98Kbbb9fSKLqqIJcZGxJGYFCkP6AezxErNrsu1C6aHxgLWl8ANS8VMeeXNen0Nr0vzCGG4-Qsok1E35N9f7wE7GcHSw//?imgmax=800)

![clip_image006[4] clip_image006[4]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEjzqsNo5D8khkLRHau_OSLQBXJt84eGL7jRcd0l9pHdOa3duLEeudLrzyd80sGilonGMEesMm4mgGsitSVJM2OCdJQZPQ2Bbma-nlwLsrTyQVkz_6-5H7UuKZFdPxr-UcpG2MKYD6MtmPg//?imgmax=800)

![clip_image008[4] clip_image008[4]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEhb-jwKdKYs54u1dmaqptJK5RffXXPhXCaDqpyREZdMnEnUbHUgMu8YWnCtWmJzyAKn0Jy8jyRRZKxbW0WeoGbMByJFuKiuWetjhF-DoTGPYf2t95Asmf7RgUocU1k42OkBrlL7ybCV-3w//?imgmax=800)

![clip_image010[4] clip_image010[4]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEj0dT2Ypd41e85oYtiBEnhhMlGGpbRUc84mzgBVk6X3-YT6A5EQ-F7Kxnfeds3sJWQICqOup6e-5YK69Q1tBYTGaAwfMcArSunT_hNhk63xoF4_N7NamPPeTwV-RZkLZDI4HKE8wFPkCK4//?imgmax=800)

![clip_image002[4] clip_image002[4]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEjvjwElDqxPJp-95qKjV7nxlqErX4qD4unAReVcfr01DEvlwLk3n81rCKD-Ew7TTtCRLJHUJDCPJgphEDaUGnX4ywTss26t1bJqzF1stbLQGqhywVIfTAr8mRkSiDBH6ifGEAuQPp2OuTM//?imgmax=800)

![clip_image004[6] clip_image004[6]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEjHOAlWNReGoAzIoHrmGe8pt_4TsGAyEujln3V7cC3hQoVQk7M5R4LSfVsL2UHKe_qcNy7dJiYJIWKjdfwGnQwx6FW2Yq3gQX8ZtZabRCltQtQDTzhSVuzBDNs5Z-T42Mt3L4jOZBV_gb4//?imgmax=800)

![clip_image006[6] clip_image006[6]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEgO0V8m7Sgs77tGBvx_F4UkbartHEZiNRprxoQmb8AjX74c_tYoH6wySGnZfGxiip7Tl3ZYueuM5A-6DCC6lHduCHjzXYlJBmk8KQtqy2RButwDStRxLCAKirX4tp33IyevtKKhKzbwgdg//?imgmax=800)

![clip_image008[6] clip_image008[6]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEiCd3Im4cBAZMxRy9iEp0Nwl0NqEZKOP7nxvpDoIn5zoSG5slWxgIQPMkX087BJOZXakI2TVyAJKdQaxZHoi_d02Kt3LkNPHbri08UKzeIveI9PvOOP4WuUvOsj2ff6nwQFrbtzTSQl4Tg//?imgmax=800)

![clip_image002[6] clip_image002[6]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEgB1cmmDxz_agTGdsQ2gQVlS5KN9FHBoQW93WpIj1e6e6K8K5tO-jgwAYQvLtsqQmrcAcj3QchebY9V-UcYPhqmi8MWTArshl8b09ODTPtciXzsEbTAj80AreUlCgpMfLH-x1VNkTbzA2M//?imgmax=800)

![clip_image004[8] clip_image004[8]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEg9AOTc52ieZVwsWtauHRnoOd-6ID7UVBJDikrKI8wP3Gw5OO-pqMMpSxJWNtWn4rEW_T0xA71seRjGQ0Y886eNtIGERpWkZjHcuf8TkeXhQMBUBE409V5eo7E_bh2JaKP83L0takSVMyg//?imgmax=800)

![clip_image006[8] clip_image006[8]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEhmw0z5q0gg_NkYoA3Hr-8j6gLECGPbChnPrcnfIY7lPQ089KjB2hyphenhyphenE6FCA5tbdp-TnLcxr1I1pNzsQRQi_jdxe5wMhG_hBMJo0mS6xihchqYYPehNUd6XltQppJsQH1BPnBG5LmvzN7hE//?imgmax=800)

![clip_image002[8] clip_image002[8]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEgESOfR4u-_tzBmJCYNTp9hvdLefW9NZrvit7-IkFoJ3leOqNKnWAH4t3idzRvfKHt-Gv0V27yKVIPl5XvrLlhLXa9IRzPNmzWtQh2qGCMEY73kkQLA4awypPSr_is4OPxMQ6V8H7RqMEc//?imgmax=800)

![clip_image004[10] clip_image004[10]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEip7VMiZEvPay0ZhINSkfTet3JzdcaYu6uxQmgW4ELRcqawTDdISfMJsd5RmSClGguk3E2xfCbVImFqb9gZR4zOqC1EaR-wJDAjBu-gOzM-XyKO0I17go5QpxsSILOmABc47Af0aNkrFqg//?imgmax=800)

![clip_image006[10] clip_image006[10]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEie_yGcV2q-LKTth9Za5kG6OtuhNyXv44xWoI3pnwEa1qCVRG-Hhfb3DgB72PbfLJ9T_IulOXeVYL__YZNbGH-kJh5oB9mBqzcxo2N87rU94u1vzyBtZCTyauI3WHx6DN96uVy5OJ67StY//?imgmax=800)

![clip_image008[8] clip_image008[8]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEhs7bkrTavkRVxgCEjotN5PtJD2oaoNZFEvVQz4n1IkzBF2xSFGVgc2dTt0VLiDKj60Ka_VRy7fH5kGncyRtAXpPZGLHBJ8AV3SHxXaXRcWkrjcJxMcZ6G3TWNIUP6NpvFIyfvIXV9127U//?imgmax=800)

![clip_image010[6] clip_image010[6]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEj4zS6nb4cpOSLf4QdAH5_W6-Vdgxcafs6-PBvNjlfidbFxnyvm0vlA70iT_5JkVjBEJFO95XqizZNd46DkpD-w1n9feFAGyg9GxKUy0U3lUnXoiY6c5IoxHunvaZMF0ccAtkOFsnHPhYE//?imgmax=800)

![clip_image012[4] clip_image012[4]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEhdeQ1eKmEmjKOTbJjugllpVE0kl2nAOZIBJfZSIFmukH3kv88q8KLiKVVxk76CpfV2mV7Y2ASMI_hKg7CWg-3bP6W9qiYejvDMYAOsHZ48scsAFn3WOiF8X1Vch8xVD3Bqx08RbuImUP0//?imgmax=800)

![clip_image014[4] clip_image014[4]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEitZq9hUVC5hJNABTwGOHho5PeFqddZjQLkQ1TBxM-eiYGXpmBmQhFSaVt5oaonVsP_PcSpV6WoUvVlJkd7kqrXw7vxdeF2NvIWpUBowfTAoGrlxxCwZOSZKxrbrzoiQkjo5MwALWuHkzM//?imgmax=800)

![clip_image016[4] clip_image016[4]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEizhymgs9f4pqZ4cShhYfBeR2yOf7pYtYBMVxwRb4oX8N3vT0nB8-IHtjsCFEp2qvXykWaief2vb-pz96gXj_fazFJhXUd3vPCqQs17IgmYr_JC2j5cV7doXlYbFKFTFW89WF5epEGBmJM//?imgmax=800)